“A better model for determining essentiality, in the author’s opinion, is a probabilistic model that tries to estimate the likelihood of a portfolio to be infringed, valid and/or essential.”

In recent years, several patent experts and commentators have claimed that there are too many “low-quality” patents being granted by patent offices around the world, or that a large percentage of patents are often found invalid by courts and judges. Until a patent is found to be invalid by a court or another tribunal, during licensing negotiations both licensor and licensee can only consider the likelihood that such patent is eventually found invalid based on the incomplete information available to them.

Similarly, it has been claimed that a patent-by-patent analysis of a large patent portfolio could determine, without any uncertainty, whether a portfolio is infringed or standard essential. For example, several studies have been published or presented in courts that try to determine which patents in a portfolio are “truly” essential.

Even if one had an infinite amount of time and processing power, and access to all the knowledge ever generated by humanity, the questions above cannot be answered with zero margin of error. To answer those questions, one would need to know how examiners, judges, courts or juries would interpret the claims in light of the prior art, read the specifications, and many other variables.

A better model, in the author’s opinion, is a probabilistic model that tries to estimate the likelihood of a portfolio to be infringed, valid and/or essential. In essence, the model accepts the notion that no patent comes with zero risk of being infringed and/or rendered invalid, and tries to estimate the risk associated with a portfolio. Such risk is a function of several considerations, such as whether the portfolio is being actively licensed, whether there is evidence of use associated with it, the available prior art, and how large the portfolio is.

In this working paper, we focus on the question of essentiality and validity. We model the likelihood that a patent is essential and/or valid as random variables following a certain probability distribution. We note that in information theory it is common to model unknown data (although those data might be known to a source and/or a receiver) as random variables that can take a value from a certain alphabet with specific probabilities. As such, at least at a macroscopic level, and in light of the many variables (see above) and patents involved, we believe our model is reasonable. The paper uses this probabilistic model to reach several conclusions for practitioners and regulators.

Literature Review

Several studies have tried to estimate the essentiality of patents that are declared as potentially essential to industry standards. For example, a number of specialized consulting firms have published reports that try to estimate what share of the families declared as potentially essential to the 4G communication standard were truly essential. Among those are Concur IP, IPLytics, PA Consulting and David Cooper. Dr. Cooper extended his methodology to the 5G communication standard in 2021 in a further study. These reports generally reach significantly divergent results.

For example, the rates of essentiality (the ratio of declared potentially essential families that were found truly essential over the totality of declared potentially essential families) vary wildly between studies: Concur IP (the results of the study can be retrieved in Plaintiff’s Direct Examination by Declaration for Witness Dr. Zhi Ding, TCL v. Ericsson, Case No. 14-00341, Dkt. 1889 (C.D. Cal. Feb. 22, 2018)) estimates a rate of essentiality of 36%, while Dr. Cooper finds a much lower rate, namely a 12% rate for 4G and an 8% rate for 5G for the industry average, excluding Ericsson’s patents. IPLytics simply uses the declarations with minor nominal adjustments, i.e. a de facto essentiality rate of 100%.

We note that the analysis performed by Cooper employs a rigorous and repeatable methodology to assess essentiality, where known experts in the field spent several hours per patent family and mapped their claims to the standard. Such methodology seeks to approach the extensive effort required to claim-chart a portfolio in preparation of a licensing discussion, where rigorous evaluations lead to real-world licensing transactions.

On the other hand, several other studies either do not disclose their methodology or try to determine whether a patent is truly essential by spending only minutes per patent. Furthermore, the analysis is often carried out by “experts” who are either undisclosed or lack credibility or expertise. With the use of Fleiss’ kappa, the 2019 Cooper study shows statistically how several of these studies reach dubious conclusions, heavily disagree with one another, and ultimately do not seem to show the methodological soundness of the 2019 and 2020 Cooper studies.

Probabilistic Model

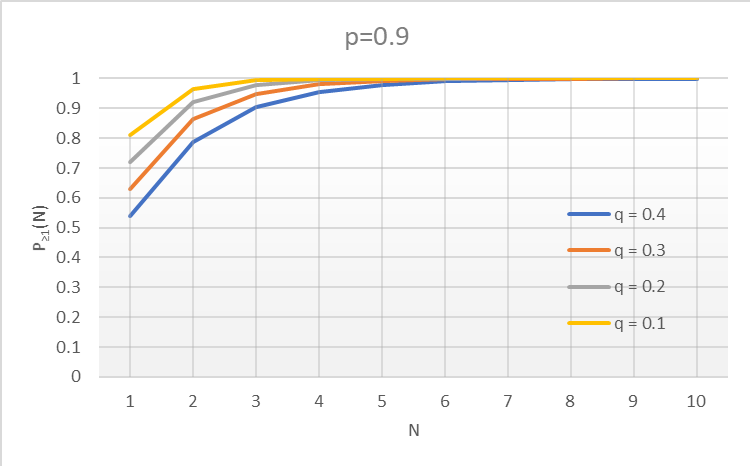

As previously discussed, we use a probabilistic model to analyze the risk associated with a portfolio of patent families either declared as potentially essential to an industry standard or families that, on the other hand, have gone through a rigorous vetting (through claim-charting, for example) and/or have been involved in previous licensing negotiations or litigation. We will also rely on the essentiality rates presented in the Cooper studies and draw some comparisons with other studies.

Let us consider a portfolio of patents with $N$ families potentially essential to a technology standard. Let’s also call $p_e$ the probability that a family is essential and $p_v$ the probability that a family is valid.

With the above definitions, assuming essentiality and validity are independent random variables, the probability that a family is essential and valid is $p_e p_v$.

Let us now define the probability that at least one family in the portfolio is essential and valid as $P$. In essence, $P$ quantifies the level of risk of infringing the portfolio for an implementer of the standard.

We show here that, treating the families as independent of one another:

$$P = 1 - (1 - p_e p_v)^N$$
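The model can be sketched numerically. The following is a minimal sketch, assuming that each family is essential and valid independently of the others, so that the probability of at least one essential and valid family equals one minus the probability that none is. The function name, the parameter names and the validity value of 0.5 are illustrative assumptions and are not taken from the paper.

```python
def portfolio_risk(n_families: int, p_essential: float, p_valid: float) -> float:
    """Probability that at least one of n_families is both essential and valid.

    Assumptions (ours, for illustration): essentiality and validity are
    independent for each family, and families are independent of one another.
    """
    if n_families < 0:
        raise ValueError("n_families must be non-negative")
    for p in (p_essential, p_valid):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    p_both = p_essential * p_valid            # one family is essential AND valid
    return 1.0 - (1.0 - p_both) ** n_families  # complement of "no family qualifies"


# Illustrative inputs only: 0.09 is the declared-only essentiality figure quoted
# in the text for 5G; 0.5 for validity is a placeholder assumption.
for n in (1, 10, 100):
    print(n, round(portfolio_risk(n, 0.09, 0.5), 4))
```

Under these assumptions the risk grows with portfolio size and with the per-family probability, which is the qualitative behavior the results section relies on.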

Results

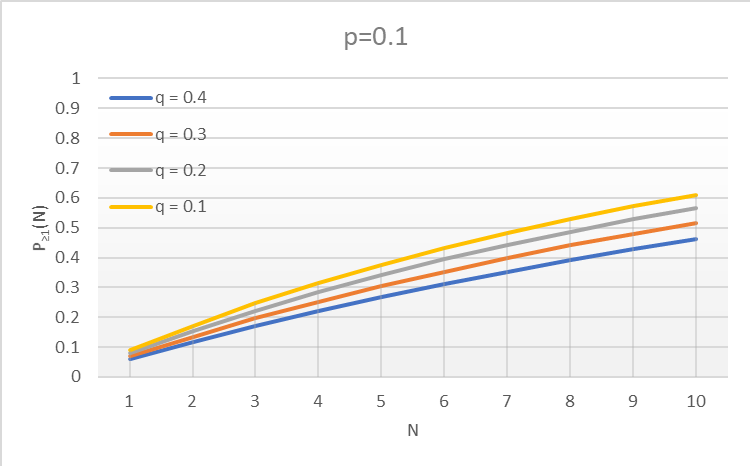

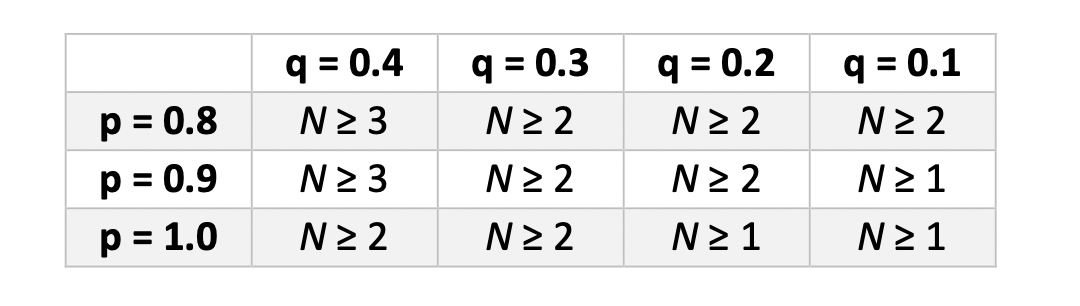

First, we will look at a scenario where a portfolio of patents has only been declared to a Standards Development Organization (SDO) as potentially essential. As Cooper has estimated, the probability that a family that has merely been declared as potentially essential to 5G is truly essential is about 9%. The author of that study also estimates the confidence interval to be ![]() . For this first scenario, we thus assume a value of the probability of essentiality in between the two probabilities, i.e. ![]() .

According to these assumptions, we plot the probability that at least one family in the portfolio is essential and valid for realistic values of the portfolio size and of the probability of validity below:

As is apparent from these results, a solid indication of essentiality (for example, through claim charts) and validity is important to justify a reasonable risk, especially for smaller portfolios.

As highlighted by the plots, only a solid indication of essentiality (for example, through claim charts) justifies a reasonably high level of risk for a small portfolio. Even large portfolios that were merely declared as potentially essential do not command a high level of risk.
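The portfolio-size effect can be made concrete by asking how many families are needed to reach a given level of risk. This is a hedged sketch under the same independence assumptions as the model; the 0.09 essentiality figure is the declared-only 5G estimate quoted above, while the claim-charted essentiality of 0.8, the validity of 0.5 and the 90% target are placeholder assumptions chosen only for illustration.

```python
import math


def risk(n_families, p_essential, p_valid):
    """Probability that at least one family is essential and valid (independence assumed)."""
    return 1.0 - (1.0 - p_essential * p_valid) ** n_families


def min_families_for_risk(target, p_essential, p_valid):
    """Smallest portfolio size whose risk reaches `target`, or None if unreachable."""
    if not 0.0 < target < 1.0:
        raise ValueError("target must lie strictly between 0 and 1")
    p = p_essential * p_valid
    if p <= 0.0:
        return None   # no family can ever qualify
    if p >= 1.0:
        return 1      # a single family is certain to qualify
    n = max(1, math.ceil(math.log(1.0 - target) / math.log(1.0 - p)))
    # Correct for floating-point rounding in the logarithms.
    while risk(n, p_essential, p_valid) < target:
        n += 1
    while n > 1 and risk(n - 1, p_essential, p_valid) >= target:
        n -= 1
    return n


# Placeholder comparison: declared-only vs. claim-charted families.
declared_only = min_families_for_risk(0.90, 0.09, 0.5)
claim_charted = min_families_for_risk(0.90, 0.80, 0.5)
print("declared-only families needed:", declared_only)
print("claim-charted families needed:", claim_charted)
```

With these placeholder inputs, a claim-charted portfolio reaches the target with far fewer families than a declared-only one, which is consistent with the point made about small portfolios.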

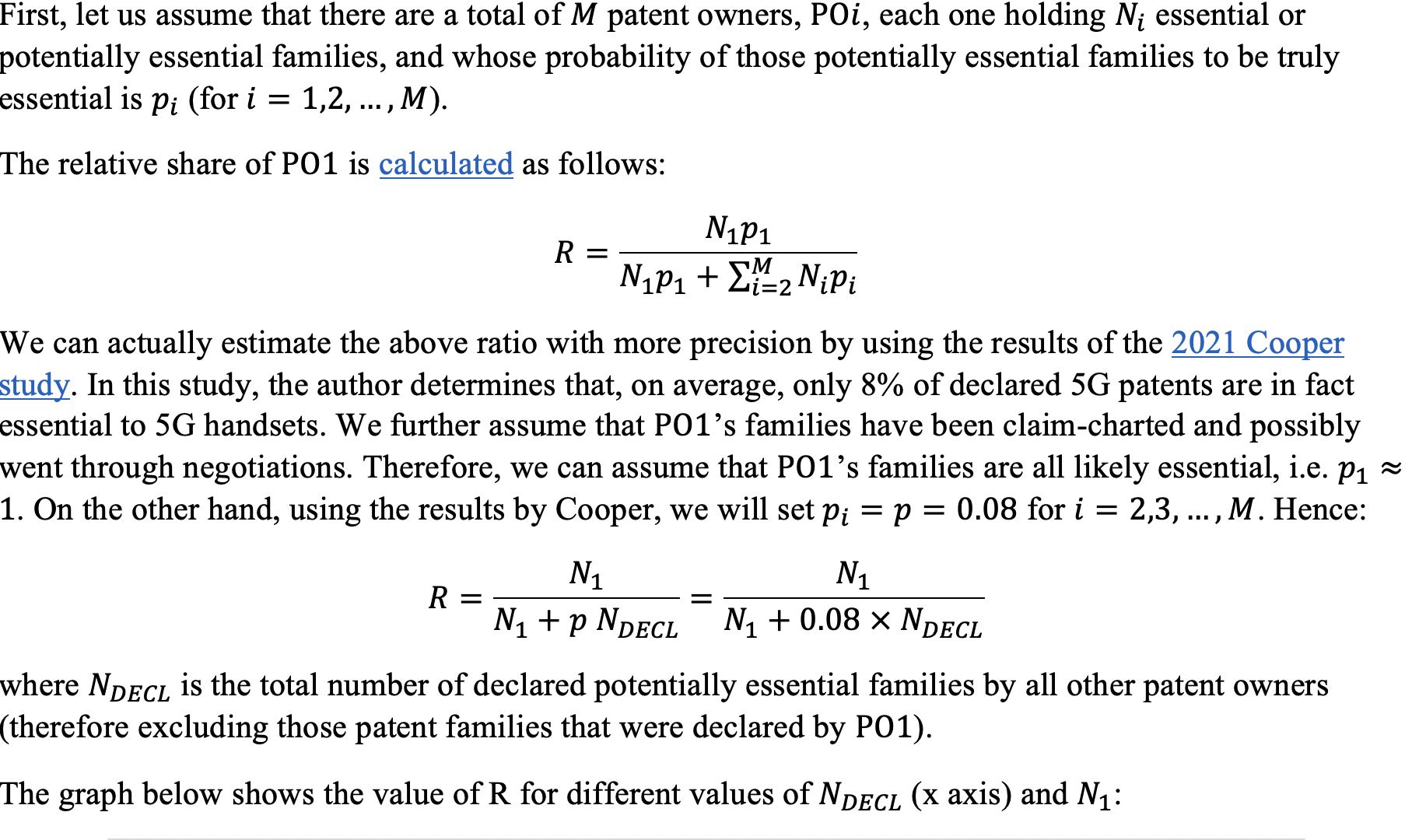

Share of Essential Patents: a Hypothetical

As Cooper has proven, several studies fail to recognize that the probability that a family declared as potentially essential is actually essential is inevitably much lower than the probability of essentiality for families that have gone through substantial scrutiny, have been claim-charted, and possibly have gone through several technical discussions and negotiations. Furthermore, different participants in the development of a standard over-declare at different rates (see Noble et al.). That fact also affects the probability of essentiality for different patent owners.

On the other hand, with a statistical model as described so far in hand, one can attempt to estimate the share of truly essential families by a patent owner over the totality of truly essential families. We will also show how relying on declarations without statistical adjustments, or with flawed statistical adjustments, can lead to absurd and hence totally unreliable results.

The results above confirm that, because of the very low probability of essentiality of families that have simply been declared as potentially essential, the share of a portfolio that has gone through a rigorous analysis remains high even as the number of declared families balloons.
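One way to illustrate this observation is to compare expected counts of truly essential families. The sketch below is an assumption-laden illustration and not the paper's computation: it takes the share as the owner's expected number of essential families divided by the expected total, and the portfolio sizes and the 0.8 probability for claim-charted families are hypothetical placeholders. Only the 0.09 declared-only figure comes from the text.

```python
def expected_share(n_owner, p_owner, n_others, p_others):
    """Ratio of the owner's expected essential families to the expected total.

    n_owner  : families held by the owner (assumed rigorously claim-charted)
    p_owner  : probability that each of those families is essential
    n_others : families merely declared as potentially essential by others
    p_others : probability that each declared-only family is essential
    """
    own = n_owner * p_owner
    total = own + n_others * p_others
    if total <= 0:
        raise ValueError("expected number of essential families must be positive")
    return own / total


# Hypothetical owner with 100 claim-charted families (p = 0.8, placeholder)
# against a growing pool of declared-only families (p = 0.09, from the text).
for n_declared in (1_000, 10_000, 100_000):
    share = expected_share(100, 0.8, n_declared, 0.09)
    print(f"{n_declared:>7} declared-only families -> share {share:.3f}")
```

Because each declared-only family contributes little to the expected total, the owner's share falls much more slowly than a raw count of declarations would suggest.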

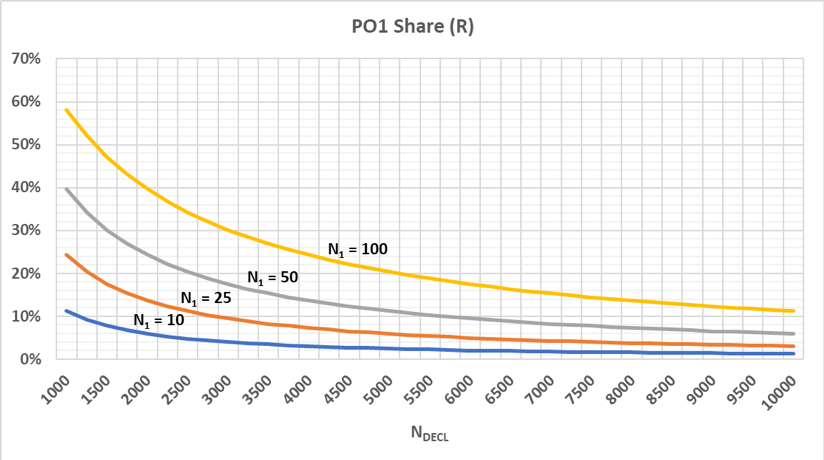

Flawed Top-Down Analysis

Top-down analysis has been used to estimate the royalty share of a specific patent owner’s portfolio over the totality of standard-essential patents. We have shown how top-down analysis that relies on studies with flawed methodologies can lead to highly incorrect and problematic results. In particular, the relative share of a patent owner with claim-charted essential families will be heavily impacted by an increase in merely declared patents when flawed studies are used. More specifically, we will compare the statistics in the 2019 Cooper study with those by Concur IP and IPLytics for 4G declarations.

Why are the studies by Concur IP and IPLytics less rigorous than the analysis in the Cooper study? In the Concur IP study, a mere 30 minutes per patent are spent to determine whether a family is essential. No claim charts are generated from the analysis, and when no determination can be made on the essentiality (or lack thereof) of a patent, the family is assumed to be essential simply by virtue of the fact that it was declared. Through this dubious methodology, the overall average over-declaration rate is estimated to be 64%, hence the probability of a family being essential is roughly 36%. On the other hand, the IPLytics study does not even attempt to estimate the over-declaration rate, and simply uses the raw declaration data. Therefore, its implied probability of essentiality is 100%.

As the results show, the share calculated for the patent owner using flawed methodologies decreases dramatically as the number of declared-only families increases, regardless of the fact that the owner has claim-charted its families. Therefore, unless a rigorous methodology is used to estimate the over-declaration rate, the share for a specific patent owner rapidly goes to zero as the number of merely declared families increases. This result has fundamental implications for innovation: without sound analysis, the top-down methodology is biased against patent owners and favors infringers, who will always benefit from a much lower (almost zero) royalty rate owed to any patent owner by simply pointing to the large number of families that have been declared as only potentially essential. The effect on investments for the development of future technology standards could be significant.
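The sensitivity of the share to the assumed essentiality rate can be sketched as follows. This is an illustration under our own assumptions, not a reproduction of the paper's figures: the owner is given a hypothetical 100 claim-charted families with a placeholder essentiality probability of 0.8, and the only thing that changes between rows is the rate applied to the declared-only families, using the 4G rates quoted in the text (12% for Cooper, 36% for Concur IP, 100% for raw declarations as in IPLytics).

```python
# Essentiality rates for declared-only 4G families, as quoted in the text.
ASSUMED_RATES = {
    "Cooper (4G)": 0.12,
    "Concur IP": 0.36,
    "IPLytics (raw declarations)": 1.00,
}


def owner_share(n_owner, p_owner, n_declared, p_declared):
    """Owner's expected essential families over the expected total."""
    own = n_owner * p_owner
    total = own + n_declared * p_declared
    if total <= 0:
        raise ValueError("expected number of essential families must be positive")
    return own / total


def shares_by_study(n_owner, p_owner, n_declared):
    """Owner's share under each study's rate for declared-only families."""
    return {
        name: owner_share(n_owner, p_owner, n_declared, rate)
        for name, rate in ASSUMED_RATES.items()
    }


# Hypothetical owner: 100 claim-charted families, p = 0.8 (placeholder values).
for n_declared in (1_000, 10_000, 100_000):
    print(n_declared, {k: round(v, 4) for k, v in shares_by_study(100, 0.8, n_declared).items()})
```

In this sketch, the higher the rate credited to merely declared families, the smaller the claim-charted owner's share at every pool size, which mirrors the bias described above.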

Findings

We have described a probabilistic model to estimate the likelihood that a patent portfolio is essential and valid. This probabilistic model reaches several practical conclusions. First, the level of risk depends on many factors, including the size of the portfolio. Particularly for smaller portfolios, solid evidence of essentiality, for example through claim charts, and validity is crucial to justify a relatively high level of risk. Second, this paper demonstrates how a top-down approach in determining royalties is not only fundamentally flawed, but also biased towards infringers, unless a rigorous analysis of essentiality is used that takes into account over-declaration rates.

Join the Discussion

2 comments so far.

Phil Snider

July 26, 2021 07:06 am

@Anon, I could not agree more. Sabattini talks about validity, but his model is only based on assumptions about essentiality. Also, none of the other research papers he cites even looks at validity. Moreover, in total he cites 5 research papers, out of which 4 are Ericsson-commissioned research (Cooper and the Bird&Bird paper that is based on Cooper). The only neutral study he cites is wrongly cited, as this response post shows: https://ipwatchdog.com/2021/07/21/drilling-criticism-top-approach-determining-essentiality/id=135792/.

When reading Sabattini’s full research paper on SSRN it feels as if he turned this upside down. He knew what the outcome of the model should be and went backwards to choose his assumptions and model design to confirm his own agenda. And surprise surprise, here we go again, the never-ending Ericsson story about how the top-down approach is biased. This is not neutral economic research but lobbyism. The Ericsson PR is very predictable.

Anon

July 16, 2021 11:40 am

Infringed, valid and essential are three separate characteristics. Application of ‘probabilistic methods’ should not commingle these.